Predicting season records for NFL teams - overview

This is the first, non-technical, part of this series. See the second part for more detail.

Introduction

I was recently looking for a good machine learning task to try out, and I thought that doing something NFL-related would be interesting, because the NFL season is about to start (finally!).

Why was I looking for a good machine learning task to try out? I have mostly done my data analysis work in R, but recently, I have been moving over to Python. As part of that process, it helps to try out as many real-world problems as possible. I have also been developing a lightweight, modular, machine learning framework with my company, Equirio.

We are going to start with a high-level, nontechnical overview of what we will be doing, and then follow that up with some technical details in a second post.

High Level Overview

Machine learning description

In machine learning, the goal is to learn from data and a known outcome to predict an unknown outcome for future data. For example, let’s say that we have data for how hot it has been for the past 10 days, and we want to predict how hot it will be tomorrow. The data (how hot it has been for the past 10 days) and the outcome (how hot it will be tomorrow) will be somewhat correlated. So, we can take data and outcomes from the past (i.e., how hot it was for the 10 days before today, and how hot it was today), and use them to predict how hot it will be tomorrow. This will not be a perfect prediction, and we will have some error, as we do not have all of the needed information. Maybe there is a cold front coming in from the north, but in our simple model, we don’t have that information.
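To make the idea concrete, here is a minimal Python sketch of how a temperature history could be turned into (data, outcome) pairs. The temperature values and the `make_examples` helper are made up for illustration; they are not part of the analysis in this post.

```python
def make_examples(temps, window=10):
    """Pair each run of `window` consecutive days with the day that follows it."""
    examples = []
    for i in range(len(temps) - window):
        past_days = temps[i:i + window]  # the data: `window` days in a row
        next_day = temps[i + window]     # the known outcome: the day after
        examples.append((past_days, next_day))
    return examples


# Hypothetical daily highs for 12 consecutive days (made-up numbers).
temps = [71, 73, 72, 75, 78, 77, 74, 76, 79, 80, 78, 77]
examples = make_examples(temps)

# The most recent 10 days are what we would hand to a trained model
# in order to predict tomorrow, whose outcome we do not know yet.
latest_data = temps[-10:]
```

Each pair in `examples` is one thing the model can learn from: ten days of data plus the outcome that actually followed them.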

How does this apply to NFL data?

In my case, I wanted to predict something NFL-related. One of the main problems in doing this kind of analysis, believe it or not, is easy access to data. It is hard to get detailed per-game data such as who did what on what play, or even season-level statistics per player.

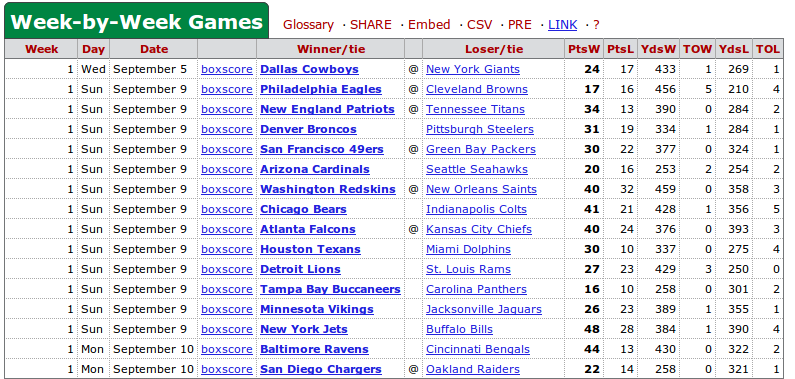

What is fairly easy to get is box score data, such as the below from Pro football reference.

You can see that it is very generic information: for each game, we have the winner, the loser, who was at home, how many points each team had, how many turnovers each team had, and how many yards each team gained.

Given this basic information, one of the simplest things we can predict is a team’s win/loss record in a given season.

Before we get started

Before we get started, we have to define an error metric and a baseline. For example, if the algorithm predicts that the Washington Redskins will win 6 games next year, and they actually win 7 games, was the algorithm good?

To answer this, we need some baseline to measure against. First, we will define the error metric. The error metric will be the mean absolute error: for each team, we take the absolute value of the predicted number of wins minus the actual number of wins, and then we average those values across all teams.
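The metric can be written as a short Python function. This is only a sketch; the function name `mean_absolute_error` is chosen here for illustration and is not taken from the framework mentioned earlier.

```python
def mean_absolute_error(predicted, actual):
    """Average of |prediction - actual result| over all teams."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    errors = [abs(p - a) for p, a in zip(predicted, actual)]
    return sum(errors) / len(errors)


# The example from above: we predict 6 wins and the team actually wins 7.
print(mean_absolute_error([6], [7]))  # 1.0
```

An error of 1.0 on its own does not say much, which is why a baseline is needed for comparison.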

Let’s take the 2010 season (unfortunately, these tables may look bad in an RSS reader):

|      | next_year_wins | team                 | year | total_wins |
|------|----------------|----------------------|------|------------|
| 1440 | 14             | new orleans saints   | 2010 | 11         |
| 1441 | 12             | pittsburgh steelers  | 2010 | 14         |
| 1442 | 15             | new england patriots | 2010 | 14         |
| 1443 | 4              | tampa bay buccaneers | 2010 | 10         |
| 1444 | 8              | philadelphia eagles  | 2010 | 10         |
| 1445 | 2              | st. louis rams       | 2010 | 7          |
| 1446 | 10             | atlanta falcons      | 2010 | 13         |
| 1447 | 4              | cleveland browns     | 2010 | 5          |
| 1448 | 9              | cincinnati bengals   | 2010 | 4          |
| 1449 | 8              | oakland raiders      | 2010 | 8          |
| 1450 | 6              | buffalo bills        | 2010 | 4          |
| 1451 | 13             | new york giants      | 2010 | 10         |
| 1452 | 15             | green bay packers    | 2010 | 14         |
| 1453 | 9              | denver broncos       | 2010 | 4          |
| 1454 | 6              | carolina panthers    | 2010 | 2          |
| 1455 | 10             | detroit lions        | 2010 | 6          |
| 1456 | 0              | tennessee oilers     | 2010 | 0          |
| 1457 | 0              | st. louis cardinals  | 2010 | 0          |
| 1458 | 8              | chicago bears        | 2010 | 12         |
| 1459 | 0              | phoenix cardinals    | 2010 | 0          |
| 1460 | 14             | san francisco 49ers  | 2010 | 6          |
| 1461 | 2              | indianapolis colts   | 2010 | 10         |
| 1462 | 5              | washington redskins  | 2010 | 6          |
| 1463 | 7              | seattle seahawks     | 2010 | 8          |
| 1464 | 8              | arizona cardinals    | 2010 | 5          |
| 1465 | 11             | houston texans       | 2010 | 6          |
| 1466 | 9              | tennessee titans     | 2010 | 6          |
| 1467 | 5              | jacksonville jaguars | 2010 | 8          |
| 1468 | 0              | los angeles rams     | 2010 | 0          |
| 1469 | 8              | san diego chargers   | 2010 | 9          |
| 1470 | 6              | miami dolphins       | 2010 | 7          |
| 1471 | 8              | new york jets        | 2010 | 13         |
| 1472 | 0              | baltimore colts      | 2010 | 0          |
| 1473 | 13             | baltimore ravens     | 2010 | 13         |
| 1474 | 7              | kansas city chiefs   | 2010 | 10         |
| 1475 | 0              | boston patriots      | 2010 | 0          |
| 1476 | 0              | houston oilers       | 2010 | 0          |
| 1477 | 0              | los angeles raiders  | 2010 | 0          |
| 1478 | 3              | minnesota vikings    | 2010 | 6          |
| 1479 | 8              | dallas cowboys       | 2010 | 6          |

We can see each team, along with how many games it won in 2010 (total_wins) and how many it won in 2011 (next_year_wins). Let’s say that we predict that each team will win the same number of games in 2011 as it won in 2010. Thankfully, we already know how many games each team actually won in 2011, so we can use our error metric to calculate the error.

Once we remove the 2012 season (we don’t yet know how many games each team will win the following year) and any teams with 0 victories (teams that do not exist anymore), we can calculate the error across all of the seasons. The error comes out to be 3.1. So, if we just assume that each team will win as many games next year as it did this year, our prediction will be off by 3.1 games on average.

Let’s try another baseline. It’s well known that teams tend to regress towards the mean. So, let’s just go with 8 as the number of victories for every team (in a 16-game season, 8 would be average). If we do this, we actually get a better result. The error is now only 2.8. Let’s use this as our baseline. If our system can reduce the error, then we can say that our system is potentially useful.
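Both baselines can be sketched in a few lines of Python. The rows below are a handful copied from the 2010 table, so the resulting errors will not match the 3.1 and 2.8 reported for the full data set, and on such a small sample the two baselines may even rank differently. The variable and function names are illustrative assumptions, not the code used for this post.

```python
def mean_absolute_error(predicted, actual):
    """Average of |prediction - actual result| over all teams."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# (team, total_wins in 2010, next_year_wins in 2011), copied from the 2010 table.
rows = [
    ("new orleans saints", 11, 14),
    ("pittsburgh steelers", 14, 12),
    ("new england patriots", 14, 15),
    ("tampa bay buccaneers", 10, 4),
    ("tennessee oilers", 0, 0),  # team that no longer exists
]

# Drop teams with 0 victories, as described above.
rows = [row for row in rows if row[1] > 0]

actual = [next_year_wins for _, _, next_year_wins in rows]

# Baseline 1: every team repeats last year's win total.
same_as_last_year = [total_wins for _, total_wins, _ in rows]
last_year_error = mean_absolute_error(same_as_last_year, actual)

# Baseline 2: every team wins 8 games.
always_eight = [8] * len(rows)
constant_error = mean_absolute_error(always_eight, actual)
```

Running the same comparison over every team and every season is what produces the figures quoted in the text.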

Training and Prediction

So, we take as much past data as we can (I used data from 1980 to now) and convert the per-game data into per-season data by calculating a number of features for each team, such as how many points the team scored in its last 5 games of the season, or its opponents’ record for the season. A feature is basically a decision criterion. If I want to know whether it will be sunny tomorrow, one data point that I might want is whether it is sunny today.
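As a rough sketch of what converting per-game data into per-season features could look like, the Python below collapses one team's games into a few numbers. The field names (`points_for`, `points_against`, `turnovers`), the `season_features` helper, and the game values are all hypothetical; the second part of this series covers the features that were actually used.

```python
def season_features(games, last_n=5):
    """Collapse one team's games (in schedule order) into per-season features."""
    wins = sum(1 for g in games if g["points_for"] > g["points_against"])
    recent = games[-last_n:]  # the last `last_n` games of the season
    return {
        "total_wins": wins,
        "points_last_n": sum(g["points_for"] for g in recent),
        "turnovers": sum(g["turnovers"] for g in games),
    }


# Made-up box scores for a shortened six-game season.
games = [
    {"points_for": 24, "points_against": 17, "turnovers": 1},
    {"points_for": 10, "points_against": 20, "turnovers": 3},
    {"points_for": 31, "points_against": 28, "turnovers": 0},
    {"points_for": 14, "points_against": 21, "turnovers": 2},
    {"points_for": 27, "points_against": 13, "turnovers": 1},
    {"points_for": 20, "points_against": 23, "turnovers": 2},
]

features = season_features(games)
```

Each team and season then becomes one row of features, paired with the number of wins in the following season as the outcome to predict.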

We can then train our machine learning model, and evaluate its error. Our error here is 2.6, which is better than the baseline.

We can then use our model to predict how teams will perform in future seasons (in this case, 2013).

After our training, we get predictions, which come out to:

|      | team                 | year | total_wins | predicted_2013_wins |
|------|----------------------|------|------------|---------------------|
| 1544 | arizona cardinals    | 2012 | 5          | 5.95                |
| 1526 | atlanta falcons      | 2012 | 14         | 9.63                |
| 1552 | baltimore colts      | 2012 | 0          | 0.00                |
| 1553 | baltimore ravens     | 2012 | 14         | 9.91                |
| 1555 | boston patriots      | 2012 | 0          | 0.00                |
| 1530 | buffalo bills        | 2012 | 6          | 7.16                |
| 1534 | carolina panthers    | 2012 | 7          | 7.85                |
| 1538 | chicago bears        | 2012 | 10         | 9.43                |
| 1528 | cincinnati bengals   | 2012 | 10         | 8.65                |
| 1527 | cleveland browns     | 2012 | 5          | 6.56                |
| 1559 | dallas cowboys       | 2012 | 8          | 8.27                |
| 1533 | denver broncos       | 2012 | 13         | 9.49                |
| 1535 | detroit lions        | 2012 | 4          | 7.33                |
| 1532 | green bay packers    | 2012 | 12         | 10.87               |
| 1556 | houston oilers       | 2012 | 0          | 0.00                |
| 1545 | houston texans       | 2012 | 13         | 10.00               |
| 1541 | indianapolis colts   | 2012 | 11         | 9.17                |
| 1547 | jacksonville jaguars | 2012 | 2          | 6.05                |
| 1554 | kansas city chiefs   | 2012 | 2          | 6.35                |
| 1557 | los angeles raiders  | 2012 | 0          | 0.00                |
| 1548 | los angeles rams     | 2012 | 0          | 0.00                |
| 1550 | miami dolphins       | 2012 | 7          | 7.74                |
| 1558 | minnesota vikings    | 2012 | 10         | 8.71                |
| 1522 | new england patriots | 2012 | 13         | 11.44               |
| 1520 | new orleans saints   | 2012 | 7          | 8.10                |
| 1531 | new york giants      | 2012 | 9          | 8.20                |
| 1551 | new york jets        | 2012 | 6          | 6.65                |
| 1529 | oakland raiders      | 2012 | 4          | 6.55                |
| 1524 | philadelphia eagles  | 2012 | 4          | 6.84                |
| 1539 | phoenix cardinals    | 2012 | 0          | 0.00                |
| 1521 | pittsburgh steelers  | 2012 | 8          | 9.41                |
| 1549 | san diego chargers   | 2012 | 7          | 7.81                |
| 1540 | san francisco 49ers  | 2012 | 14         | 9.72                |
| 1543 | seattle seahawks     | 2012 | 12         | 9.06                |
| 1537 | st. louis cardinals  | 2012 | 0          | 0.00                |
| 1525 | st. louis rams       | 2012 | 7          | 6.36                |
| 1523 | tampa bay buccaneers | 2012 | 7          | 7.25                |
| 1536 | tennessee oilers     | 2012 | 0          | 0.00                |
| 1546 | tennessee titans     | 2012 | 6          | 6.93                |
| 1542 | washington redskins  | 2012 | 10         | 9.12                |